Contribute to an Open MCP Directory

Add your custom MCP server to our directory and contribute to the growing ecosystem.

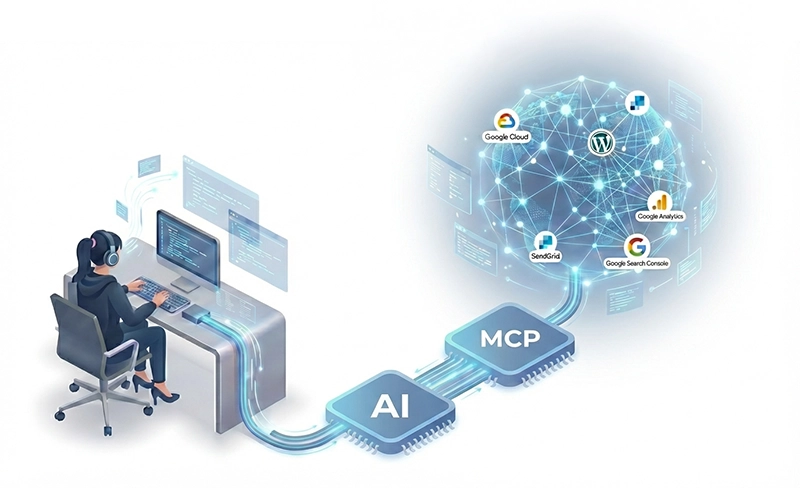

Introducing MCP Data Connectors

Model Context Protocol (MCP) is an open standard that enables seamless communication between AI models and external tools, services, and data sources.

Explore prototypr.ai's featured MCP servers that are ready to enhance your AI's capabilities

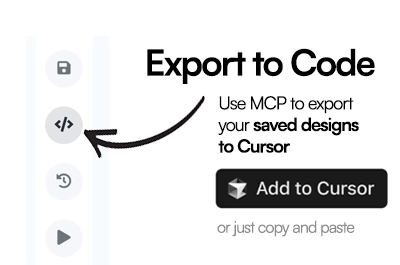

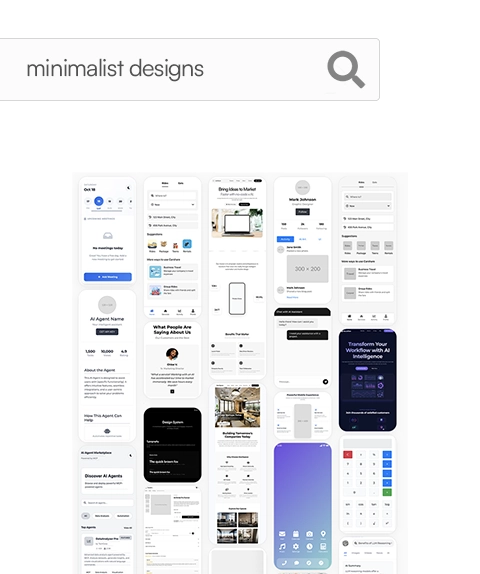

Easily search and export designs from your workspace and community marketplace with MCP. Access all your design assets through natural language queries.

View Prototypr.ai MCP Details

A custom Google Analytics 4 Agent as an MCP server, powered by a fine-tuned GPT model and the Google Analytics API.

Learn about GA4 Agent MCP

An Open Source Google BigQuery MCP server that enables teams to explore their BigQuery datasets and run SQL queries using natural language.

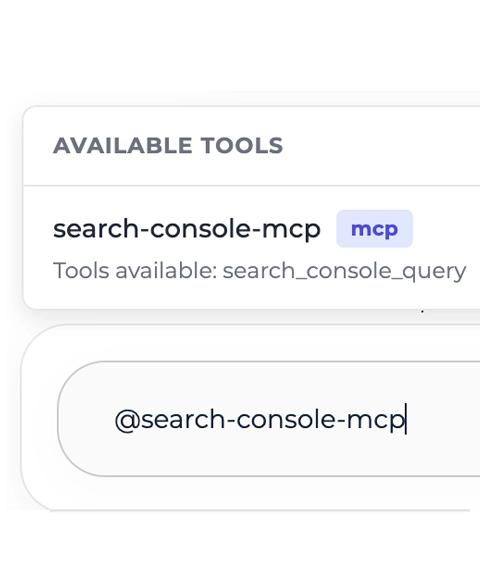

Download on Github

An Open Source Google Search Console MCP server that enables you to query the GSC API with natural language and get insights into the performance of your SEO initiatives.

Download on Github

An Open Source SendGrid MCP server that enables you to save HTML templates to SendGrid, pull a list of templates, and get statistics about the performance of your email marketing program.

Download on Github

An Open Source WordPress MCP server that enables teams to deploy web pages created in prototypr.ai Studio and to import image assets to remix with Nano Banana.

Download on Github

Transform your AI capabilities with these key benefits

Connect your AI models to any MCP tool or service with standardized, reliable connections that work out of the box.

Give your AI models access to live data and the ability to take actions, making them truly interactive and responsive.

Secure your MCP integrations with authentication and fine-grained access controls built into the Model Context Protocol.

MCP eliminates friction by connecting LLMs to data services through a standardized protocol that works across any service.

MCP servers provide LLMs with new tools and capabilities that can improve your stakeholders' or customers' experience and trust.

Join a growing ecosystem of tools, services, and developers building innovative solutions on top of the leading standard that is MCP.

Streamline design hand-offs with prototypr.ai MCP by exporting your designs from your workspace into IDEs like Cursor or any other MCP Client.

Community-driven designs make prototypr.ai better for everyone, even more so when you can discover and import these designs and templates from your favourite IDE.

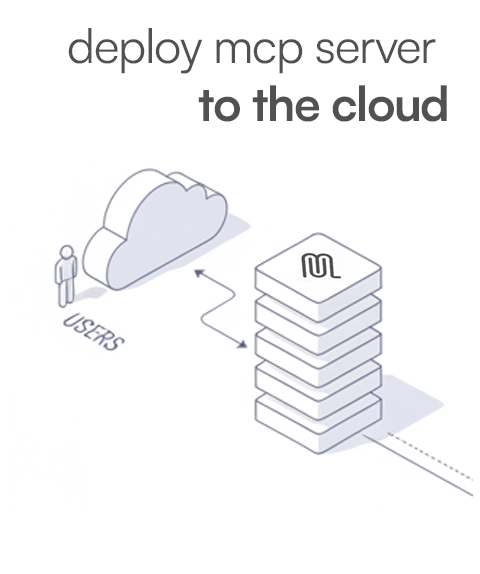

Data transparency and trust are critical when working with AI Agents and MCP. That's why prototypr.ai is working to build open MCP servers that you can deploy on your own infrastructure, with access under your control.

Built with Flask in Python, this Open Source Google Search Console MCP server is available to download on Github and ready to deploy on your own infrastructure.

Download on Github

Add your custom MCP server to our directory and contribute to the growing ecosystem.

Get started by building a simple MCP server that can act as a bridge between systems. Create a pure Python MCP server built on top of Flask and learn how you can modify it for your own purposes. Code included.

Read How to Build a MCP Server

Everything you need to know about MCP

Integrating MCP into your application and workflows offers key benefits:

Less Context Switching: MCP users can stay in their workflow while AI connects to tools in the background.

Ease of Integration: MCP is an open standard that simplifies connecting AI agents to your existing tools, APIs, or databases.

Enables New Distribution Channels: MCP enables your service to reach more users across AI platforms that are MCP compatible.

Cross-Tool Compatibility: Works consistently across platforms like Claude, Cursor, OpenAI’s Responses API and prototypr.ai.

Open Source: MCP is built on open standards and fosters transparency, collaboration, and community‑driven innovation. MCP allows anyone to build compatible tools without licensing barriers.

MCP tackles the core limitations of current AI integrations and directly addresses:

Disconnected data: Traditional LLMs can’t access live business systems. MCP fixes that by standardizing how models access data and services.

Integration inefficiency: Instead of hardcoding multiple APIs into each AI app, MCP provides a universal interface.

Security risks: You define and authenticate exactly which tools/models can exchange data through your MCP server.

Maintenance overhead: Extending functionality no longer means rewriting APIs. With MCP, new capabilities can be added in a modular fashion.

APIs expose “endpoints” that callers hit to receive a deterministic response. MCP exposes capabilities (i.e., tools, resources, and prompts) that assistants can discover, understand, and then use as part of a larger workflow. Your app doesn’t just answer a question once, but actively participates in a conversation managed by the assistant, across multiple steps and contexts.

Source: How to Build a MCP Server - A Practical Guide for Developers - prototypr.ai

MCP supports multiple authentication methods including OAuth 2.0, API keys, and mutual TLS. A good resource to read is OpenAI's Responses API documentation, which details how developers can handle authentication with an MCP server.
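As a rough illustration of the API key option, the sketch below checks an `Authorization: Bearer <key>` header on the server side. It is a minimal example under stated assumptions, not the prototypr.ai implementation: `VALID_API_KEYS`, `extract_bearer_token`, and `is_authorized` are hypothetical names, and a real deployment would load keys from a secrets store rather than a literal.

```python
import hmac

# Hypothetical key store (assumption): real keys would come from a database
# or secrets manager, never from a literal in source code.
VALID_API_KEYS = {"demo-key-123"}


def extract_bearer_token(headers):
    """Return the token from an 'Authorization: Bearer <token>' header, or None."""
    value = headers.get("Authorization", "")
    scheme, _, token = value.partition(" ")
    token = token.strip()
    if scheme.lower() != "bearer" or not token:
        return None
    return token


def is_authorized(headers):
    """Return True when the request carries a known API key as a Bearer token."""
    token = extract_bearer_token(headers)
    if token is None:
        return False
    # compare_digest avoids leaking key contents through timing differences.
    return any(
        hmac.compare_digest(token.encode(), key.encode())
        for key in VALID_API_KEYS
    )
```

A request handler would call `is_authorized(request_headers)` before dispatching any MCP method and return an HTTP 401 when it is false.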

An MCP Server acts as a bridge between your data and your AI model, exposing your application's functionality as 'tools' that AI Agents can discover and use. It translates your APIs, data, or functions into MCP-compliant services that any compatible client (like Claude, Cursor, OpenAI or prototypr.ai) can use via natural language requests.
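To make the "bridge" idea concrete, here is a minimal sketch of how a server might expose one function as a tool. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the protocol's method names for discovery and invocation. Everything else is an assumption made for illustration: the `word_count` tool, the `TOOLS` registry, and `handle_request` are hypothetical, and a real server also needs the `initialize` handshake and a transport (for example a Flask route) around this function.

```python
def word_count(text):
    """Example function the server wraps as a tool (hypothetical)."""
    return len(text.split())


# Hypothetical registry: tool name -> metadata plus the Python callable.
TOOLS = {
    "word_count": {
        "description": "Count the words in a piece of text.",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "handler": word_count,
    }
}


def handle_request(request):
    """Dispatch one JSON-RPC request (already parsed into a dict)."""
    req_id = request.get("id")
    method = request.get("method")
    params = request.get("params") or {}

    if method == "tools/list":
        # Discovery: describe each tool without exposing the callable.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}

    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            error = {"code": -32602, "message": "Unknown tool"}
            return {"jsonrpc": "2.0", "id": req_id, "error": error}
        try:
            text, failed = str(tool["handler"](**params.get("arguments", {}))), False
        except Exception as exc:  # report tool failures inside the result
            text, failed = str(exc), True
        result = {"content": [{"type": "text", "text": text}], "isError": failed}
        return {"jsonrpc": "2.0", "id": req_id, "result": result}

    error = {"code": -32601, "message": "Method not found"}
    return {"jsonrpc": "2.0", "id": req_id, "error": error}
```

Adding a capability then means adding one entry to the registry; the dispatch code does not change.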

MCP Clients are AI interfaces that can connect to MCP Servers to access external data or actions securely. They interpret natural language into MCP requests.

Some examples of MCP Clients include: Anthropic’s Claude, OpenAI’s Responses API, and the one in the prototypr.ai AI Workspace, all of which can request live information using the MCP standard.
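On the client side, the flow is typically "discover, then call". The sketch below builds the JSON-RPC 2.0 messages for `tools/list` and `tools/call` (the protocol's method names) and sends them through a pluggable `send` function. The helper names and the `fake_send` stand-in are hypothetical and exist only so the example runs without a network; a real client would perform the `initialize` handshake first and send messages over its configured transport.

```python
import itertools

_request_ids = itertools.count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict with a fresh id."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def discover_and_call(send, tool_name, arguments):
    """List the server's tools, then call one and return its text output."""
    listing = send(make_request("tools/list"))
    available = {tool["name"] for tool in listing["result"]["tools"]}
    if tool_name not in available:
        raise LookupError(f"Server does not offer tool {tool_name!r}")

    reply = send(make_request("tools/call", {"name": tool_name, "arguments": arguments}))
    result = reply["result"]
    if result.get("isError"):
        raise RuntimeError("Tool reported an error")
    return [part["text"] for part in result["content"] if part["type"] == "text"]


def fake_send(message):
    """Stand-in transport (assumption): returns canned replies, no network."""
    if message["method"] == "tools/list":
        tool = {"name": "word_count", "description": "Count words.", "inputSchema": {"type": "object"}}
        result = {"tools": [tool]}
    else:
        text = message["params"]["arguments"]["text"]
        result = {"content": [{"type": "text", "text": str(len(text.split()))}], "isError": False}
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}
```

Calling `discover_and_call(fake_send, "word_count", {"text": "hello MCP world"})` exercises both steps against the canned transport.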

To connect an MCP Client to an MCP server, you need to pass in credentials to grant access. This typically involves adding an mcp.json file or object to the client, which in the case of the prototypr.ai MCP client looks like:

    // mcp.json example
    {
      "mcpServers": {
        "prototypr": {
          "url": "https://www.prototypr.ai/mcp",
          "displayName": "Prototypr AI",
          "description": "Prototypr AI is a research platform that helps people build, measure and learn faster with leading LLMs.",
          "icon": "https://www.prototypr.ai/static/img/ai_icon_32x32.png",
          "headers": {
            "Authorization": "Bearer API_KEY"
          },
          "transport": "stdio"
        }
      }
    }

Since the prototypr.ai MCP server requires authentication, you would need to generate an API Key from within the platform.
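One way to keep the key out of the config file is to leave the `API_KEY` placeholder in place and substitute the real value at load time. The sketch below assumes that approach; `resolve_server` and the `PROTOTYPR_API_KEY` environment variable are hypothetical names, and the embedded config is a trimmed copy of the example above. Note that the leading `// mcp.json example` comment is not valid JSON and has to be dropped before parsing.

```python
import json
import os

# Trimmed copy of the example config; the "//" comment line is omitted
# because json.loads rejects comments.
CONFIG_TEXT = """
{
  "mcpServers": {
    "prototypr": {
      "url": "https://www.prototypr.ai/mcp",
      "headers": {"Authorization": "Bearer API_KEY"},
      "transport": "stdio"
    }
  }
}
"""


def resolve_server(config_text, name, api_key):
    """Return the URL and headers for one server, with the key filled in."""
    config = json.loads(config_text)
    server = config["mcpServers"][name]
    headers = {
        header: value.replace("API_KEY", api_key)
        for header, value in server.get("headers", {}).items()
    }
    return {"url": server["url"], "headers": headers}


if __name__ == "__main__":
    # PROTOTYPR_API_KEY is a hypothetical variable name chosen for this sketch.
    key = os.environ.get("PROTOTYPR_API_KEY", "replace-me")
    print(resolve_server(CONFIG_TEXT, "prototypr", key)["url"])
```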