fine tuning an AI model, minimalist abstract art, white background - DALL·E 3 via prototypr.ai

Fine Tuning AI Models - A Practical Guide for Beginners

- What is fine tuning? Fine tuning is a technique used to improve the performance of a pre-trained AI model on a specific task.

- How do I use it? This beginner's guide walks you through the steps to get started with fine tuning GPT-4o mini.

- Are there any prerequisites for this article? Some experience with prompt engineering, basic programming and spreadsheets is helpful for understanding how to fine tune an OpenAI model.

Introduction

I learned a lot fine tuning my first AI model.

It was January 2024. GPT-3.5-Turbo was the leading model for fine tuning at the time.

I tested it. I learned. And it was a wonderful thing.

I have since used the process outlined in this post to create a fine tuned GPT that powers a Google Analytics dashboard generator and helps me answer questions about my data. But that is a story for another post.

Today, I'm excited to walk you through my original use case for fine tuning with the all new GPT-4o mini, which is the latest model from OpenAI.

But, what is fine tuning? How does it work? And how can you use it to improve performance over an out of the box GPT?

This tutorial walks you through how to get started with fine tuning GPT-4o mini using OpenAI's Fine Tuning Platform. I'll show you a very specific use case that I'm currently working on at prototypr.ai. This post is geared towards people who want to learn how to get started with fine tuning. As part of this tutorial, I assume you have experience with prompt engineering and basic programming, and know how to use a spreadsheet. So if this is you, please read on!

Let's get started!


What is fine tuning?

Fine tuning is a technique used to improve the performance of a pre-trained AI model on a specific task.

If you are familiar with prompting ChatGPT using examples of inputs and outputs, then you are already halfway there to understanding how to fine tune an AI model. If you need a refresher or are new to prompt engineering, I’d recommend checking out OpenAI’s prompt engineering best practices guide before jumping into the wonderful world of fine tuning.

The process of fine-tuning a model involves continuing the training phase, where the model is adjusted with a smaller and more specialized dataset. This task-specific training enhances the model’s existing knowledge, enabling it to perform better on a particular task while still retaining the broad understanding it acquired during its initial training on large datasets.

Benefits of fine tuning

Fine-tuning provides several benefits.

One major advantage is efficiency; fine tuning requires less data and computational resources than training a model from scratch. This is because the model already understands the general nuances of human language.

Another benefit is performance optimization.

Strategically fine tuning a model can drastically improve task-specific performance, leading to faster, more accurate and relevant outputs.

Use Cases for Fine-Tuning AI Models

There are a myriad of applications for fine tuned models that span across industries and functions.

In customer service, fine-tuned chatbots can provide swift and accurate support, reflecting a company's specific knowledge base.

In the media and entertainment industry, content generation can be enhanced with fine tuned models that are personalized to a brand's style and tone.

Fine-tuning OpenAI Models

OpenAI has a number of models that can be fine-tuned with proprietary data to improve their performance and specialize them for specific tasks.

According to OpenAI fine-tuning documentation, there are a number of models that can be fine tuned. These include:

- gpt-4o-mini-2024-07-18: with a 65k training context window

- gpt-3.5-turbo-1106: with a 16k context window

- gpt-3.5-turbo-0613: with a 4k context window

- babbage-002

- davinci-002

OpenAI also mentions that you can fine tune a fine tuned model, which sounds super useful, especially since you won't have to retrain the model from scratch and can save on training costs.

Tutorial: Getting started with fine-tuning using GPT-4o mini

For this tutorial, I’m going to walk you through a very specific use case for how I’m applying fine tuning at prototypr.ai.

Think of this blog post as a framework that you can repurpose for your own fine tuning initiatives. It will cover the following steps:

- Identifying the problem that fine tuning can help address

- Acquiring data for fine tuning

- Fine Tuning GPT-4o mini in the OpenAI UI

Step One: Identifying the Problem You Want to Solve with Fine-Tuning

Building startups and testing ideas is hard. Finding product-market fit is even harder. It’s incredibly difficult, and both resource and capital intensive.

One of the solutions that I’ve been exploring to make the startup journey easier is to use AI to rapidly generate digital prototypes. Once I have a prototype made, I can polish it up and test it in-market in a fraction of the time.

And so I created an AI UI Generator affectionately named ui_w/ AI Studio. It’s powered by leading large language models such as OpenAI’s incredible GPT-4 Turbo model and Anthropic's Claude 3.5 Sonnet. And with a bit of crafty prompting, it can produce wonderful prototypes that you can test on the web.

I really love the product, but I have a hypothesis: the longer the time-to-UI generation, the worse the user experience, which results in higher churn.

So I’ve turned to fine tuning to help me:

- Reduce Latency

- Improve Output Style Quality

- Improve Natural Language Editing Capabilities

Originally, I was hoping that GPT-4 Turbo would help with these areas, and it does work extremely well editing a UI with natural language, but latency is still my biggest concern in production.

So let me show you the steps I recently took to fine tune GPT-4o mini. Hopefully you can apply these learnings to your own AI initiatives.

Step Two: Acquiring data to fine tune a model

Acquiring data to fine-tune GPT-4o mini was probably the most intensive part of the entire process.

Over the past few months, I've been using GPT-4o and more recently Claude 3.5 Sonnet to generate different user interfaces. I iterated and edited these experiences in ui_w/ AI Studio and exported code for over 30 different prototypes. I then consolidated the prompts I used along with a system message and started to build a spreadsheet of examples to train the model on.

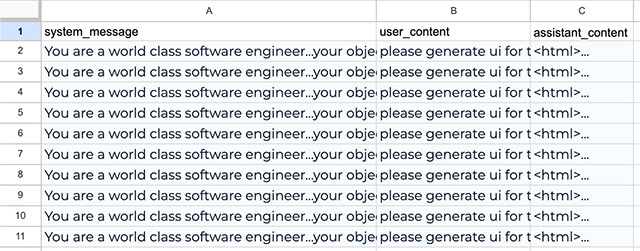

The data is currently proprietary, but the spreadsheet of examples looked something like this:

It contained 3 columns:

- system_message: This is the primary instructions or prompt guide you want the model to follow

- user_content: This is user input

- assistant_content: This is the model output
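
As a rough illustration of that layout, the sketch below writes a tiny CSV with the same three column names using Python's standard csv module. The two rows are made-up placeholders, not the proprietary training data, and build_example_csv is a hypothetical helper name used only for this example.

```python
import csv
import io

# Column names taken from the spreadsheet described above.
COLUMNS = ["system_message", "user_content", "assistant_content"]

# Placeholder rows for illustration only (not real training data).
EXAMPLE_ROWS = [
    {
        "system_message": "You are a world class software engineer.",
        "user_content": "please generate ui for a pricing page",
        "assistant_content": "<section>placeholder pricing markup</section>",
    },
    {
        "system_message": "You are a world class software engineer.",
        "user_content": "please generate ui for a login form",
        "assistant_content": "<form>placeholder login markup</form>",
    },
]


def build_example_csv(rows):
    """Return CSV text with a header row followed by one line per example."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = build_example_csv(EXAMPLE_ROWS)
print(csv_text)
```

Keeping one example per row and one role per column makes it easy to review examples by eye before converting them.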

Once I had 30 examples, I exported the spreadsheet as a csv. I then converted the csv into the required jsonl format and uploaded it into OpenAI’s Fine Tuning UI.

SIDE NOTE: It is recommended that you acquire at least 50-100 examples, but depending on the quality of your examples you may start seeing benefits with fewer. The minimum number to start fine tuning with is 10. So, start with a number of high quality examples, understand how the model performs and iterate on your fine tuned model. I'm definitely working towards getting over 100 examples to fine tune with for this use case.

Now, if you are familiar with the Chat Completions API, the jsonl file should look very similar, as shown below:

{"messages": [{"role": "system", "content": "You are a world class software engineer…."}, {"role": "user", "content": "please generate ui for…"}, {"role": "assistant", "content": "...."}]}

{"messages": [{"role": "system", "content": "You are a world class software engineer…."}, {"role": "user", "content": "please generate ui for…"}, {"role": "assistant", "content": "...."}]}

{"messages": [{"role": "system", "content": "You are a world class software engineer…."}, {"role": "user", "content": "please generate ui for…"}, {"role": "assistant", "content": "...."}]}
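
Before uploading, it can help to sanity check the file. Here is a minimal sketch of such a check, assuming only the three roles used in this post (system, user, assistant) and the minimum of 10 examples mentioned in the side note. The function name validate_jsonl_lines is hypothetical, and this is not OpenAI's own validator, which accepts more than this sketch covers.

```python
import json

# Only the roles used in this post; the real format allows more.
ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_jsonl_lines(lines, min_examples=10):
    """Return a list of human-readable problems found in JSONL training lines."""
    problems = []
    example_count = 0
    for line_number, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as error:
            problems.append(f"line {line_number}: invalid JSON ({error.msg})")
            continue
        example_count += 1
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {line_number}: missing 'messages' list")
            continue
        roles = []
        for message in messages:
            role = message.get("role") if isinstance(message, dict) else None
            content = message.get("content") if isinstance(message, dict) else None
            if role not in ALLOWED_ROLES or not isinstance(content, str):
                problems.append(f"line {line_number}: bad message {message!r}")
            roles.append(role)
        if "assistant" not in roles:
            problems.append(f"line {line_number}: no assistant message")
    if example_count < min_examples:
        problems.append(f"only {example_count} examples, need at least {min_examples}")
    return problems


sample = '{"messages": [{"role": "system", "content": "s"}, {"role": "user", "content": "u"}, {"role": "assistant", "content": "a"}]}'
print(validate_jsonl_lines([sample] * 10))         # ten well-formed examples
print(validate_jsonl_lines([sample, "not json"]))  # one bad line and too few examples
```

Because the function accepts any iterable of lines, an open file handle can be passed in directly instead of a list.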

Let’s break down how I converted the csv of training data into a jsonl file.

How to convert a CSV of Training Data into a JSONL File

At this stage, I’m going to assume you are familiar with Jupyter notebooks. If not, it’s a great tool for exploring and manipulating data. I actually do quite a bit of data exploration in these notebooks as I find them very easy to use. It’s highly recommended.

The first thing you’ll need to do is import the necessary python modules. We are going to need pandas for importing the csv and the json module for helping to convert it into an appropriate shape so that we can save the fine tuning data as jsonl.

# Import modules
import json
import pandas as pd

Next, let’s import the csv we created and convert it into a list of python dictionaries.

# Open csv
csv_training_data = pd.read_csv('fine_tuning_demo.csv')

# Convert to JSON String
csv_training_str = csv_training_data.to_json(orient='records')

# parse JSON string and convert it into a list of python dictionaries
training_dict = json.loads(csv_training_str)
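
If pandas is not available, roughly the same list of dictionaries can be produced with the standard library. This is an alternative sketch rather than the approach used in this post. load_training_rows is a hypothetical helper, and it reads from any file-like object so the example can run on an in-memory string with placeholder content.

```python
import csv
import io


def load_training_rows(csv_file):
    """Read CSV rows into a list of dicts keyed by the header row."""
    return [dict(row) for row in csv.DictReader(csv_file)]


# In-memory stand-in for fine_tuning_demo.csv (placeholder content).
demo_csv = io.StringIO(
    "system_message,user_content,assistant_content\n"
    "You are a helpful assistant.,please generate ui for a navbar,<nav>placeholder</nav>\n"
)
rows = load_training_rows(demo_csv)
print(rows[0]["user_content"])

# With a real file, something like this should work:
# with open('fine_tuning_demo.csv', newline='', encoding='utf-8') as f:
#     training_dict = load_training_rows(f)
```

One difference to be aware of: empty cells come back as empty strings here, whereas the pandas route above turns them into None.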

Now let’s create two functions:

- format_for_fine_tuning() - this transforms the training_dict above into the appropriate shape we need for fine tuning

- convert_to_jsonl_and_save() - this takes the output of format_for_fine_tuning() and saves our data as a jsonl file.

Here are those two functions:

def format_for_fine_tuning(data):
    """
    Background: This function formats a list of dicts into the required shape for fine tuning

    Params:
        data (list): list of dicts

    Returns:
        training_data_list (list): a list in the proper format for converting to jsonl
    """
    training_data_list = []
    for x in data:
        updated_data = {
            "messages": [
                {
                    "role": "system",
                    "content": x['system_message']
                },
                {
                    "role": "user",
                    "content": x['user_content']
                },
                {
                    "role": "assistant",
                    "content": x['assistant_content']
                }
            ]
        }
        training_data_list.append(updated_data)

    print(training_data_list)
    return training_data_list

And here's the code for converting the data into a jsonl file:

def convert_to_jsonl_and_save(data_list, filename):
    """
    Background:
        This function converts the data_list provided into a jsonl file

    Params:
        data_list (list): a list of dicts ready to convert to jsonl
        filename (str): the name of the jsonl file to write
    """
    with open(filename, 'w') as file:
        for data_dict in data_list:
            # Convert each dictionary to a JSON string
            json_str = json.dumps(data_dict)
            # Write the JSON string followed by a newline character to the file
            file.write(json_str + '\n')

    print(f"Data has been written to {filename}")

Now let’s run these two functions and we have our jsonl file that we can use to start fine tuning in the OpenAI UI.

# format dict into shape for fine tuning
fine_tune_formatted_data = format_for_fine_tuning(training_dict)

# Save as jsonl
convert_to_jsonl_and_save(fine_tune_formatted_data, 'ui_training_data.jsonl')

If everything worked correctly, you should see the message:

“Data has been written to ui_training_data.jsonl”

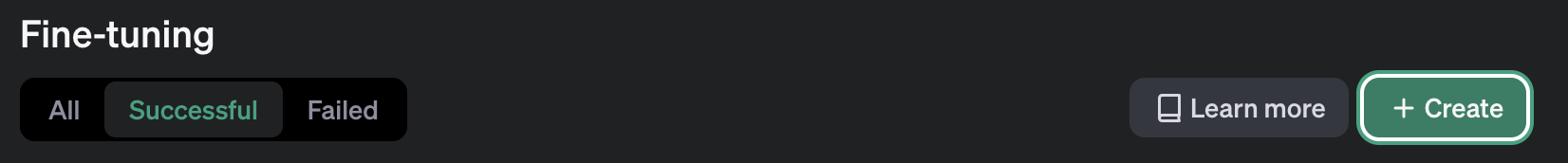

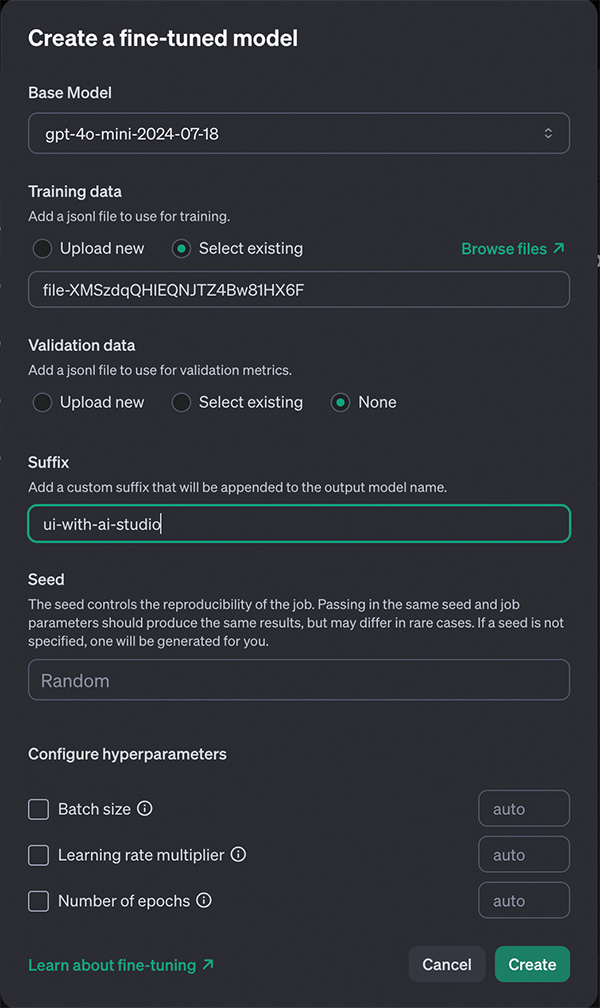

Step Three: Fine Tuning GPT-4o mini in the OpenAI UI

With our new jsonl file in hand, let’s login to the OpenAI platform and navigate to ‘Fine Tuning’ in the left side menu.

Then click on the Create New CTA.

This will open up a fine-tune model selection menu.

Select gpt-4o-mini-2024-07-18 as your base model and then upload your jsonl file as the training data.

Then click on the Create CTA.

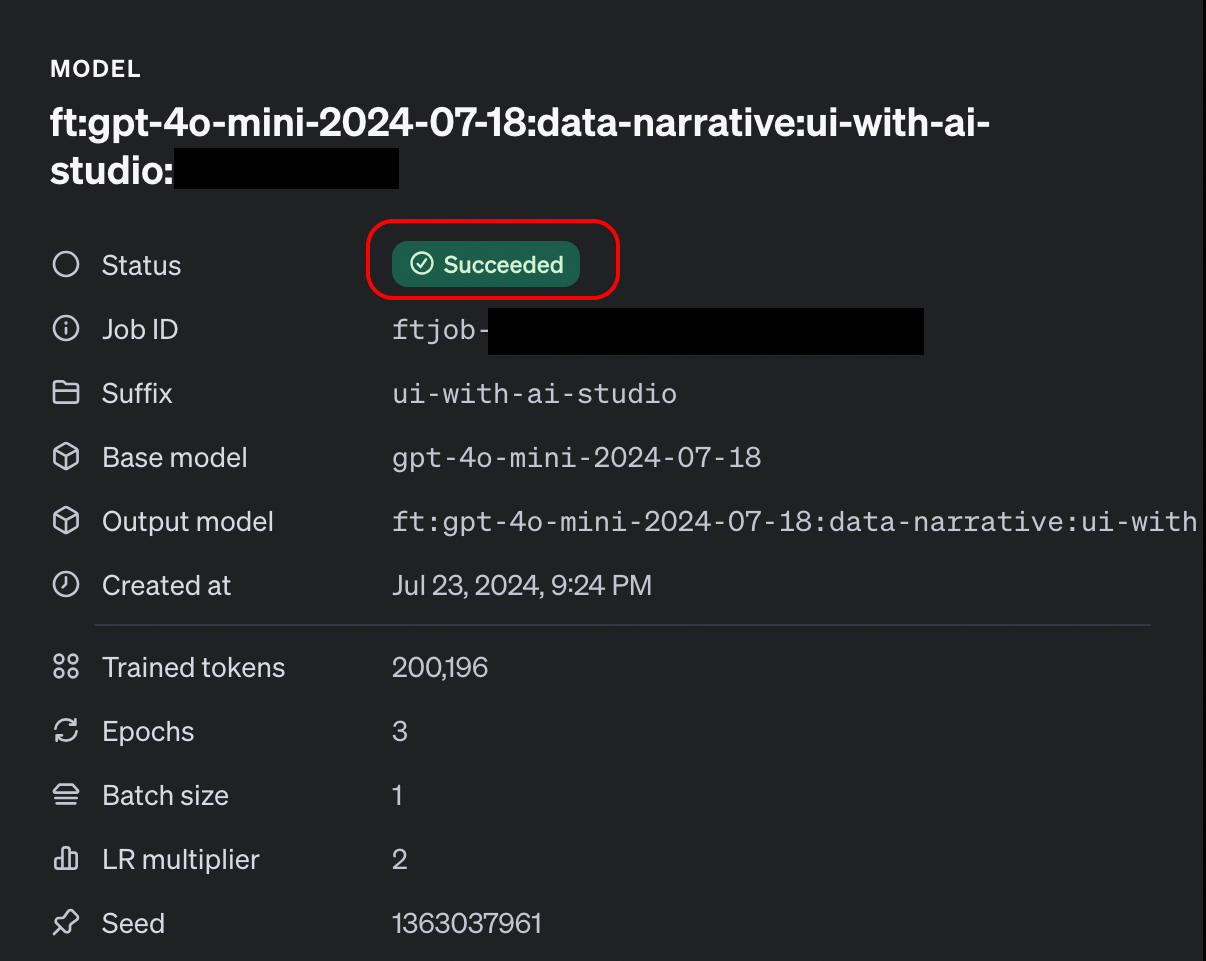

Now the fine tuning job will start. When I trained it, the training job took about 5 minutes to complete, which was pretty good. I’d assume the more examples you have, the longer it will take. OpenAI does send you an email notification when your fine tuning job is done, so you don’t have to stick around and wait for it to complete.

If all goes well, you should see a success notification with the new model that you can use.
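
The same steps can also be scripted instead of clicked through. The sketch below is one way that might look with the openai Python package (version 1.0 or later), using its client.files.create, client.fine_tuning.jobs.create and client.fine_tuning.jobs.retrieve calls. The helper names and the 30 second polling interval are my own assumptions, and the functions take the client as a parameter so nothing here contacts the API until you call them.

```python
import time


def start_fine_tuning_job(client, jsonl_path, base_model="gpt-4o-mini-2024-07-18"):
    """Upload a JSONL training file and create a fine tuning job for it."""
    with open(jsonl_path, "rb") as training_file:
        uploaded = client.files.create(file=training_file, purpose="fine-tune")
    return client.fine_tuning.jobs.create(training_file=uploaded.id, model=base_model)


def wait_for_fine_tuned_model(client, job_id, poll_seconds=30):
    """Poll the job until it finishes and return the fine tuned model name."""
    while True:
        job = client.fine_tuning.jobs.retrieve(job_id)
        if job.status == "succeeded":
            return job.fine_tuned_model
        if job.status in ("failed", "cancelled"):
            raise RuntimeError(f"Fine tuning job ended with status: {job.status}")
        time.sleep(poll_seconds)  # arbitrary interval, adjust as needed


# Usage (needs the openai package and an OPENAI_API_KEY; not run here):
# from openai import OpenAI
# client = OpenAI()
# job = start_fine_tuning_job(client, "ui_training_data.jsonl")
# print(wait_for_fine_tuned_model(client, job.id))
```

Passing an existing fine tuned model name as base_model should be how you continue training a model you have already fine tuned, but treat that as something to confirm in OpenAI's documentation.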

You can now copy and paste the new fine tuned model into a chat completion request and start using it \o/

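# OpenAI Chat Completion request with the fine tuned model (openai>=1.0 client)
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

completion = client.chat.completions.create(
    model=insert_fine_tuned_gpt_model,  # placeholder: your fine tuned model name
    messages=message_data  # placeholder: list of chat messages
)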
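
The message_data variable in the request above is left undefined. One plausible way to build it, assuming you reuse the same system message that appears in your training examples, is sketched below. build_message_data is a hypothetical helper and both strings are placeholders.

```python
def build_message_data(system_message, user_content):
    """Build a chat messages list in the same shape as the training examples."""
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": user_content},
    ]


message_data = build_message_data(
    "You are a world class software engineer.",  # placeholder system message
    "please generate ui for a signup page",      # placeholder user prompt
)
print(message_data)
```

The assistant message is left out on purpose, since that is the part the fine tuned model is expected to generate.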

Next Steps: Testing the Fine Tuned Model and Iterating on the Results

Now it’s time to test out your new model and see if it helps solve the problems we defined earlier.
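
Since reducing latency was one of my goals, a simple timing harness is a reasonable place to start. This is only a sketch: measure_latency is a hypothetical helper, and generate stands for any function that sends a prompt to a model (base or fine tuned) and returns its output. A fake generator is used here so the example runs without an API key.

```python
import statistics
import time


def measure_latency(generate, prompts):
    """Call generate(prompt) for each prompt and return the median seconds taken."""
    durations = []
    for prompt in prompts:
        started = time.perf_counter()
        generate(prompt)
        durations.append(time.perf_counter() - started)
    return statistics.median(durations)


def fake_generate(prompt):
    """Stand-in for a real model call so the sketch runs offline."""
    time.sleep(0.01)
    return f"<div>{prompt}</div>"


median_seconds = measure_latency(fake_generate, ["navbar", "pricing page", "login form"])
print(f"median latency: {median_seconds:.3f}s")
```

Running the same prompts through the base model and the fine tuned model, and comparing the two medians, gives a first rough read on whether latency actually improved.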

OpenAI recommends between 50 and 100 examples to start seeing significant performance improvements. So I’m excited to keep iterating and making the performance better.

In the meantime, I’ve updated ui_w/ AI Studio to use the new fine tuned model. You can try it out for yourself in the app by clicking on the options CTA and then selecting GPT-4o mini Fine Tuned.

As I continue tweaking the model, I'll be sure to post any updates here or on my LinkedIn profile.

That's all for now. Thanks so much for reading.

Happy Fine Tuning!

About the Author

Hi, my name is Gareth. I’m the creator of prototypr.ai and the founder of a startup called Data Narrative. If you enjoyed this post, please consider connecting with me on LinkedIn.

If you need help with your fine tuning initiatives or are looking into building with AI, please feel free to reach out. I love building GenAI and end-to-end measurement solutions that help businesses grow.

Until next time!